Hi there! 👋 I am Yaxin Luo.

About Me

Hello! I am a first-year Machine Learning PhD student at MBZUAI, advised by Prof. Zhiqiang Shen and Prof. Mohsen Guizani. I also work closely with my friend Xiaofu Chen. My research vision centers on building Autonomous Agents that can perceive, reason, and act in both digital (GUI/Computer Use/Web) and physical environments. To achieve this, I focus on developing native Multimodal Foundation Models with unified understanding, reasoning, and generation capabilities. These models serve as the core intelligence that powers agentic systems to execute complex real-world tasks effectively.

Previously, I earned my Bachelor's degree from the Technical University of Denmark, where I was fortunate to be supervised by Prof. Dim P. Papadopoulos. During my bachelor's studies, I was also lucky to collaborate with Dr. Gen Luo and Prof. Rongrong Ji on efficient deep learning research. Earlier, I spent an intense and rewarding year at the University of Edinburgh studying pure mathematics and physics. That experience sparked my passion for science and technology and deepened my curiosity about the unknown; at the time, I wanted to explore String Theory. Before Edinburgh, I was enrolled in a Bio-Medicine program at the University of Queensland and preparing for the UCAT test to be admitted into the university's medical school, but I failed in the end, because I was mostly focused on managing a high-street multi-brand boutique in Brisbane's Southbank near the casino and cared far more about business than about study and research. The Edinburgh year changed my priorities and set me on a research path, thanks to the advice, encouragement, and support of my academic personal tutor there, Prof. Ana Rita Pires. All of these experiences have made me who I am today.

My research interests focus on:

- Autonomous Agents: Building intelligent agents capable of interacting with digital and physical environments just like humans. I focus on enabling multimodal models to perceive, reason, and execute complex workflows across GUIs (Computer Use/Web Agents) and IoT devices in the physical world (agents that control your smart-home devices). The goal is to create reliable, generalist systems that automate real-world tasks, from software operation to smart home control, significantly boosting productivity and bridging the gap between AI reasoning and actionable execution.

- Multimodal Foundation Models: Developing native multimodal foundation models that perform unified understanding, reasoning, and generation across video, language, and speech. These models serve as the core intelligence (the "brain") that powers autonomous agents, enabling them to ground perception in action for embodied AI, robotics, and beyond.

Recently, I have been focusing on the anatomy of LLM training data and on on-device models for unified understanding and generation.

News

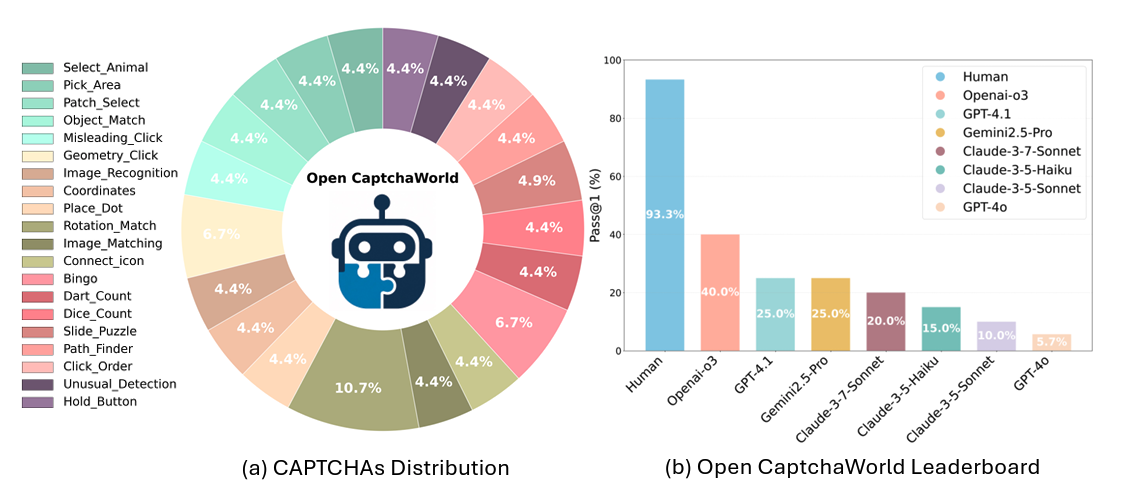

[2025-09-18] 🚀 OpenCaptchaWorld has been accepted by NeurIPS 2025.

Selected Publications

(* indicates equal contribution)

For a full and up-to-date publication list, please refer to my Google Scholar page.

OpenCaptchaWorld: A Comprehensive Web-based Platform for Testing and Benchmarking Multimodal LLM Agents

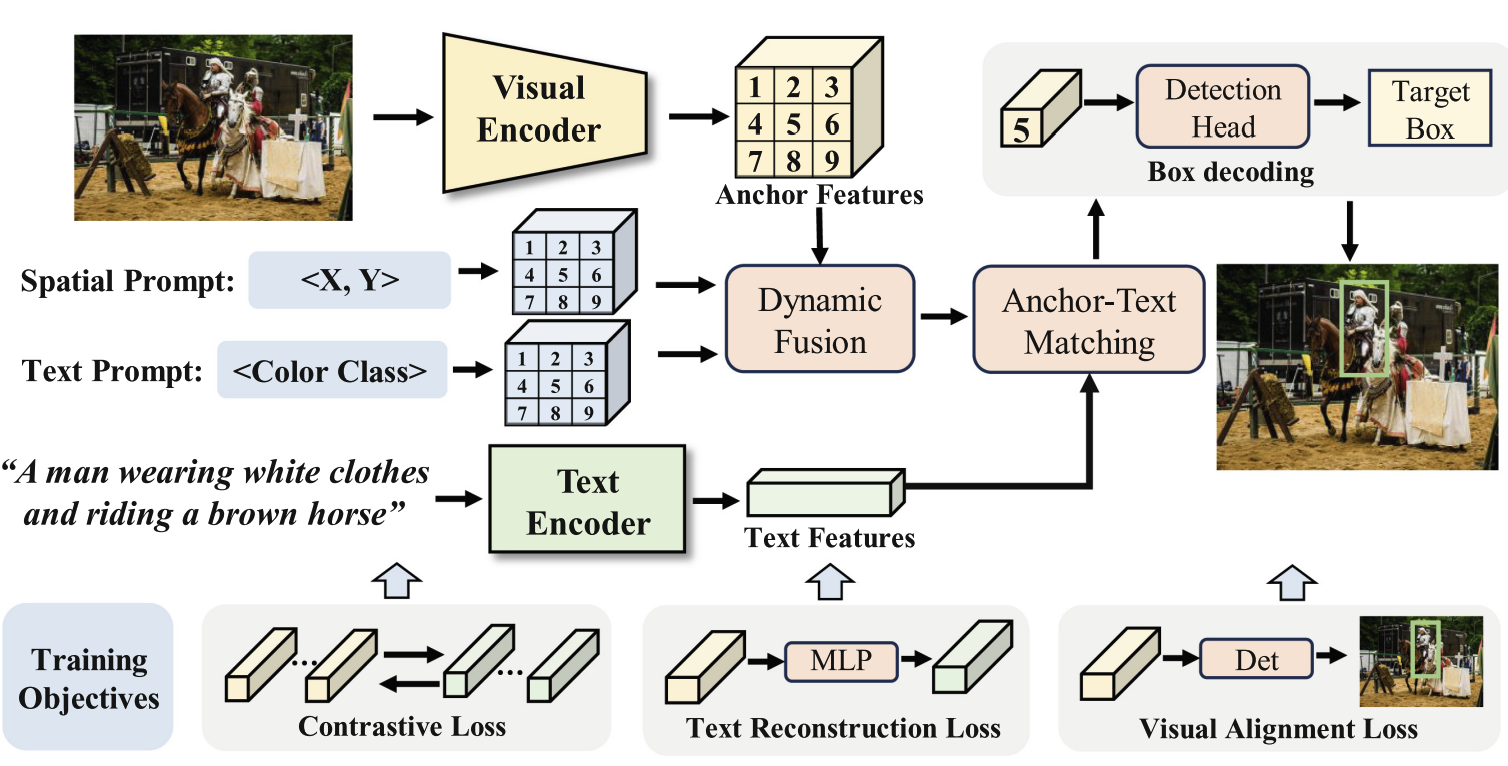

APL: Anchor-Based Prompt Learning for One-Stage Weakly Supervised Referring Expression Comprehension

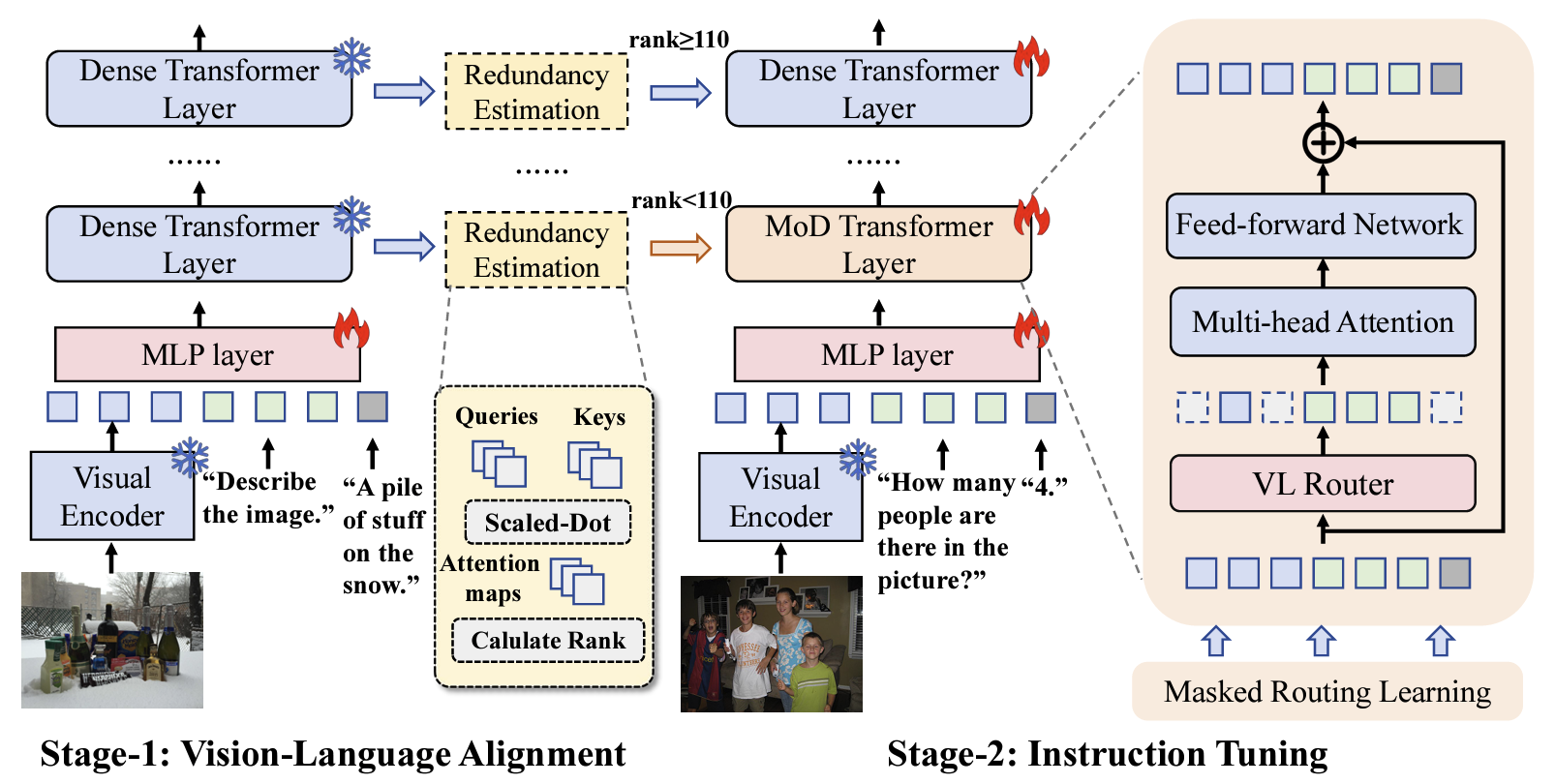

γ-MoD: Exploring Mixture-of-Depth Adaptation for Multimodal Large Language Models

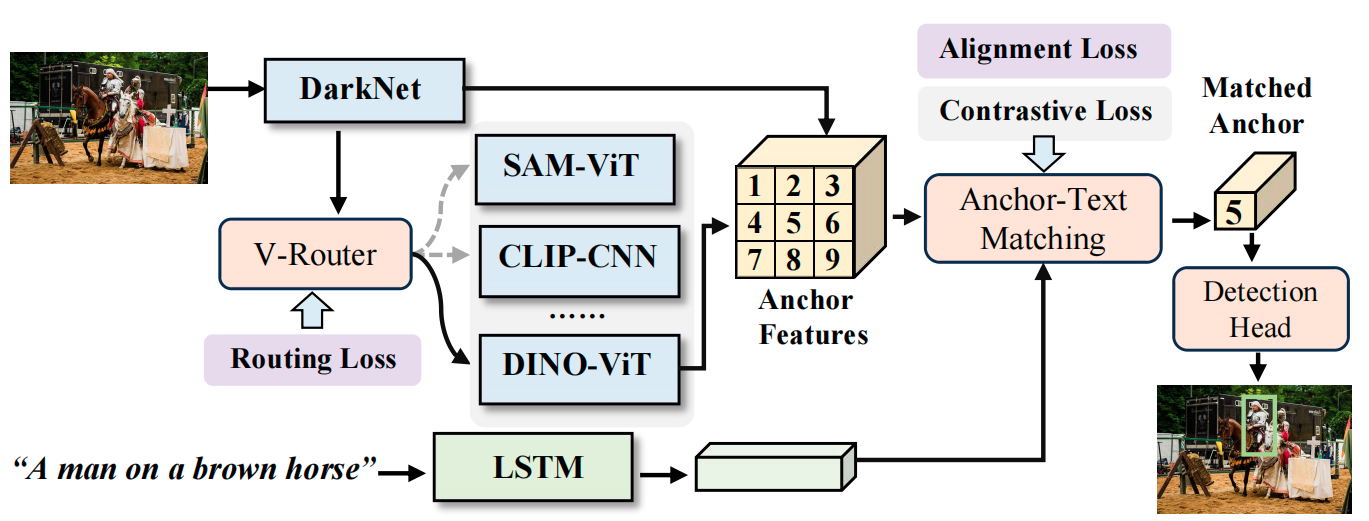

DViN: Dynamic Visual Routing Network for Weakly Supervised Referring Expression Comprehension